Redirect 301 HTAccess

May 04, 2021

301 Redirects

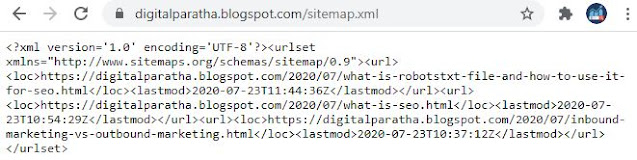

This is the cleanest way to redirect a URL: quick, easy, and search-engine friendly. Remember that .htaccess files only work on Apache servers.

Redirect a Single Page

Redirect 301 /oldpage.html http://www.yoursite.com/newpage.html

Redirect 301 /oldpage2.html http://www.yoursite.com/folder/
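
As a rough sketch of how these lines might sit together in an .htaccess file, assuming the file lives in the site's document root and that Apache's mod_alias module is enabled. The /oldfolder and /newfolder paths are hypothetical and only there to show that Redirect matches by path prefix:

```apache
# .htaccess in the document root of yoursite.com (assumed location)

# One old page to one new page
Redirect 301 /oldpage.html http://www.yoursite.com/newpage.html

# One old page to a folder
Redirect 301 /oldpage2.html http://www.yoursite.com/folder/

# Hypothetical folder move: Redirect matches on the start of the path,
# and whatever follows the match is appended to the target, so
# /oldfolder/a.html is sent to http://www.yoursite.com/newfolder/a.html
Redirect 301 /oldfolder http://www.yoursite.com/newfolder
```

The same prefix behavior is what makes the whole-site redirect work.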

Redirect an Entire Site

This approach keeps links intact. That is, www.oldsite.com/some/crazy/link.html will become www.newsite.com/some/crazy/link.html. This is extremely helpful when you are just “moving” a site to a new domain. Place this in the .htaccess file on the OLD site:

Redirect 301 / http://www.newsite.com/
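
If the new site does not mirror the old URL structure, one possible variant is to send every old URL to a single page instead of keeping the path. A minimal sketch, assuming mod_alias is enabled and that the new home page is where visitors should land:

```apache
# On the OLD site: send every request to the new home page.
# RedirectMatch takes a regular expression, and because the target
# has no backreference ($1), the original path is dropped.
RedirectMatch 301 ^/.*$ http://www.newsite.com/
```

Use one whole-site rule or the other, not both; the plain Redirect line is usually the better choice when the old paths still exist on the new domain.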